[Image 1]

Introduction

Hey it's a me again @drifter1!

Today we continue with the Parallel Programming series about the OpenMP API. I highly suggest that you read the previous articles of the series, which you can find at the end of this one. The topic of this article is how we Target Devices other than the host CPU, as well as more on Work Distribution!

So, without further ado, let's get straight into it!

GitHub Repository

Requirements - Prerequisites

- Basic understanding of the Programming Language C, or even C++

- Familiarity with Parallel Computing/Programming in general

- Compiler

- Linux users: GCC (GNU Compiler Collection) installed

- Windows users: MinGW32/64 - To avoid unnecessary problems I suggest using a Linux VM, Cygwin or even a WSL Environment on Windows 10

- MacOS users: Install GCC using brew or use the Clang compiler

- For more Compilers & Tools check out: https://www.openmp.org//resources/openmp-compilers-tools/

- Previous Articles of the Series

Recap

A parallel region is defined using a parallel region construct:

#pragma omp parallel [clause ...]

{

/* This code runs in parallel */

}

int i;

#pragma omp parallel for private(i) [clause ...]

for(i = 0; i < N; i++){

...

}

#pragma omp sections [clause ...]

{

/* run in parallel by all threads of the team */

#pragma omp section

{

/* run once by one thread */

}

#pragma omp section

{

/* run once by one thread */

}

...

}

#pragma omp single

#pragma omp critical [[(name)] [ hint (hint-expr)]] [clause ...]

{

/* critical section code */

}

#pragma omp atomic [read | write | update | capture] [hint(hint-expr)] [clause ...]

/* statement expression*/

#pragma omp task [clause ...]

{

/* code block */

}

Explicit barriers can be placed by using the barrier directive:

#pragma omp barrier

#pragma omp flush [(list)]

#pragma omp for simd [clause]

Device Targeting

Until now, the series has shown how the OpenMP API can be used to run various programming constructs in parallel on the multiple threads of the system's "main" CPU. This was made possible by the various directives/constructs that OpenMP offers to the programmer. But OpenMP isn't limited to execution on the CPU!

OpenMP offers device targeting (or offloading) directives that can be used to make parts of the program execute not only on the CPU of the computer itself, but also on other hardware attached to it, such as graphics cards.

Declare Target Construct

The pair of directives that has to be used in order to define that a section of the program has to be compiled for a different device is:

#pragma omp declare target

/* target device variables and functions */

#pragma omp end declare target

It's important to note that the code is not executed when using declare target! The directives only mark what has to be compiled for the device.
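As a minimal sketch of how this might look in practice (the names square and square_on_device are made up for illustration and are not from the series repository), a function is marked for device compilation and only runs on the device once a target region calls it:

```c
/* Everything between the two directives is compiled for the host
   and for the target device. Nothing is executed at this point. */
#pragma omp declare target
int square(int x)
{
    return x * x;
}
#pragma omp end declare target

/* Hypothetical wrapper: the call inside the target region is what
   actually runs square() on the device. */
int square_on_device(int value)
{
    int result = 0;

    /* value is a scalar and is made available inside the region
       automatically (firstprivate by default since OpenMP 4.5);
       result is mapped tofrom so the answer returns to the host. */
    #pragma omp target map(tofrom: result)
    {
        result = square(value);
    }
    return result;
}
```

Compiled with something like gcc -fopenmp, the region falls back to the host when the compiler has no offloading support or no device is available, so the function returns the same value either way.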

Target Construct

In order to map variables to a device data environment and execute statements on that device, a target directive has to be used:

#pragma omp target [clauses]

{

/* device code */

}

- device(device_number) - Specify the device that executes the code. If not included, the code executes on the default device.

- map() - Maps data between the host and device. Types of mappings:

- alloc: variables - Specify variables to be allocated (they will be uninitialized)

- to: variables - Specify that the variable values should be copied from the host to the device when entering the region

- from: variables - Specify that the variable values should be copied from the device back to the host when leaving the region

- tofrom: variables - Combination of to and from. This is the default map type when none is specified

- if(expression) - To make the device offloading conditional. If the expression is evaluated to false the code is executed on the host, otherwise on the device.

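Arrays can be mapped partially or completely by using array sections in the map clause, which take one of the following forms:

- [lowerbound : length]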

- [lowerbound :]

- [: length]

- [:]
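The clauses above can be sketched with a small array addition. This is only an illustration under assumptions: vector_add and its use_device flag are hypothetical names. Note that map(to: ...) moves data from the host to the device, while map(from: ...) moves results back to the host.

```c
/* Hypothetical helper: c[i] = a[i] + b[i], offloaded when use_device is non-zero. */
void vector_add(const float *a, const float *b, float *c, int n, int use_device)
{
    /* a[0:n] and b[0:n] are array sections (lowerbound 0, length n).
       to:   inputs are copied host -> device on entry.
       from: the output is copied device -> host on exit.
       if:   when use_device is 0 the region runs on the host instead. */
    #pragma omp target map(to: a[0:n], b[0:n]) map(from: c[0:n]) if(use_device)
    {
        for (int i = 0; i < n; i++)
            c[i] = a[i] + b[i];
    }
}
```

Since a, b and c are plain pointers here, the array sections are what tell OpenMP how many elements to transfer.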

Device Management Routines

OpenMP offers the following Runtime Routines for Device Management:

- omp_set_default_device() - specify the default device

- omp_get_default_device() - returns the default device id

- omp_get_num_devices() - returns the number of devices

- omp_get_device_num() - returns the device id of the device executing

- omp_get_initial_device() - returns the device number of the host device

- omp_is_initial_device() - check if the device executing the code is the initial/host device
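A rough sketch of how some of these routines could be used to check whether offloading actually happens. The function names are hypothetical, and the _OPENMP guard is only there so that the code still builds when OpenMP is disabled:

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Number of non-host devices that OpenMP can offload to. */
int count_target_devices(void)
{
#ifdef _OPENMP
    return omp_get_num_devices();
#else
    return 0;   /* built without OpenMP: host only */
#endif
}

/* Returns 1 if a target region ended up executing on the host. */
int region_ran_on_host(void)
{
    int on_host = 1;
#ifdef _OPENMP
    #pragma omp target map(tofrom: on_host)
    {
        on_host = omp_is_initial_device();
    }
#endif
    return on_host;
}

/* Prints a short summary of the device setup. */
void print_device_summary(void)
{
    printf("target devices: %d\n", count_target_devices());
#ifdef _OPENMP
    printf("default device: %d\n", omp_get_default_device());
    printf("host device number: %d\n", omp_get_initial_device());
#endif
    printf("target region ran on the %s\n",
           region_ran_on_host() ? "host" : "device");
}
```

Calling print_device_summary() from main is a quick way to find out if the compiler and runtime in use really offload, because a target region silently falls back to the host when no device is available.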

Target Data Directive

To construct a device environment only, a target data directive has to be used:

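#pragma omp target data [clauses]

The region itself only sets up the device data environment. Target constructs nested inside of it execute the device code:

#pragma omp target data [clauses]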

{

#pragma omp target

{

/* device code */

}

}

Target Enter and Exit Data Directives

Using the Target or Target Data Constructs, the data allocated for the device is automatically deallocated at the end of the construct.

If the memory needs to be more persistent then the following needs to be used:

#pragma omp target enter data [clauses]

/* device environment */

#pragma omp target exit data [clauses]

- map(to:) in the target enter data directive to copy values from the host to the device

- map(from:) in the target exit data directive to copy values back to the host
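To compare the two approaches, here is a minimal sketch with made-up function names. The first function keeps an array on the device across two target regions using a structured target data region, and the second one does the same with an enter/exit data pair:

```c
/* Structured: v[0:n] lives on the device for the whole block. */
void scale_and_shift(float *v, int n, float factor, float offset)
{
    #pragma omp target data map(tofrom: v[0:n])
    {
        /* v[0:n] is already present on the device, so the map
           clauses on the nested regions do not copy anything. */
        #pragma omp target map(tofrom: v[0:n])
        for (int i = 0; i < n; i++)
            v[i] *= factor;

        #pragma omp target map(tofrom: v[0:n])
        for (int i = 0; i < n; i++)
            v[i] += offset;
    }   /* v[0:n] is copied back and released here */
}

/* Unstructured: the lifetime is not tied to a block. */
void double_persistent(float *v, int n)
{
    /* allocate on the device and copy host -> device */
    #pragma omp target enter data map(to: v[0:n])

    #pragma omp target map(tofrom: v[0:n])
    for (int i = 0; i < n; i++)
        v[i] *= 2.0f;

    /* copy device -> host and release the device memory */
    #pragma omp target exit data map(from: v[0:n])
}
```

In a real program the enter and exit directives would typically sit in different functions (for example an init and a cleanup routine), which is exactly what the structured form cannot express.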

Target Update Directive

In order to synchronize the data between the device and host memory without having to deallocate it, a target update directive is used:

#pragma omp target update [clauses]

- to(list) - Copy Values from the Host to the Device

- from(list) - Copy Values from the Device to the Host
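A small sketch of both directions, assuming a hypothetical function update_demo: the host changes the array while it is mapped, pushes the change with to(), lets the device work on it, and pulls the result back with from().

```c
/* Hypothetical demo: returns the sum computed in the target region
   and leaves the doubled values in data. */
int update_demo(int *data, int n)
{
    int sum = 0;

    #pragma omp target data map(to: data[0:n])
    {
        /* The host modifies its copy, so the device copy is now stale. */
        for (int i = 0; i < n; i++)
            data[i] += 1;

        /* host -> device */
        #pragma omp target update to(data[0:n])

        #pragma omp target map(tofrom: sum) map(to: data[0:n])
        for (int i = 0; i < n; i++) {
            sum += data[i];
            data[i] *= 2;
        }

        /* device -> host, without deallocating anything */
        #pragma omp target update from(data[0:n])
    }
    return sum;
}
```

Without the two update directives the device would sum the old values, and the doubled values would never reach the host, because the enclosing region maps the array with to only.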

Work Distribution

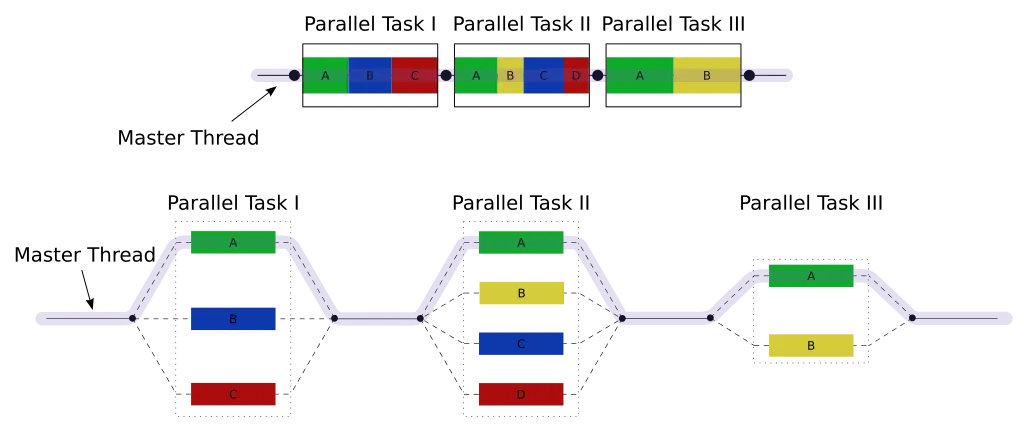

OpenMP allows creating a single team of threads using the parallel construct, which has been used constantly during this series, but it also offers other ways of distributing the work.

Teams Construct

Using a teams construct, multiple teams of threads (a so-called league) are created:

#pragma omp teams [clauses]

{

/* code to be executed by each team */

}

- private, firstprivate, shared, default - specify a list of variables with specific data-sharing

- reduction - specify the type of reduction to be applied

- num_teams - specify the number of teams to be created

- thread_limit - specify the maximum number of threads in each team
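As a minimal sketch of these clauses (count_teams is a hypothetical name), the following counts how many teams were really created. The teams construct is nested directly inside a target construct, which works with both older and newer OpenMP versions:

```c
/* Hypothetical helper: asks for `requested` teams and returns how
   many the runtime actually created. */
int count_teams(int requested)
{
    int teams_seen = 0;

    #pragma omp target map(tofrom: teams_seen)
    #pragma omp teams num_teams(requested) thread_limit(8) reduction(+:teams_seen)
    {
        /* Executed once per team, by the initial thread of that team.
           The reduction adds up the private copies at the end. */
        teams_seen += 1;
    }
    return teams_seen;
}
```

The runtime is free to create fewer teams than requested, so the result is only guaranteed to be between 1 and the requested number.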

Distribute Construct

In order to distribute for loops across teams inside a teams region, a distribute construct is used:

#pragma omp distribute

#pragma omp teams distribute

/* for loops */

For example, in order to create a league of teams that executes a parallel for loop on a different device using simd instructions, the following has to be used:

#pragma omp target teams distribute parallel for simd

/* for loop */

I will return to this, after we cover the CUDA API, so that we can offload code into an Nvidia GPU!
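In the meantime, here is a minimal sketch of the combined construct on a classic saxpy loop. The function name and its parameters are assumptions made for illustration, and it has not been benchmarked on a GPU:

```c
/* Hypothetical saxpy: y[i] = a * x[i] + y[i] */
void saxpy(int n, float a, const float *x, float *y)
{
    /* target      : offload to the device
       teams       : create a league of teams
       distribute  : split the iterations across the teams
       parallel for: split each team's share across its threads
       simd        : vectorize each thread's iterations */
    #pragma omp target teams distribute parallel for simd \
            map(to: x[0:n]) map(tofrom: y[0:n])
    for (int i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```

The iterations are independent of each other, which is what makes this loop safe to split across teams, threads and SIMD lanes.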

So, no full example program(s) today!

RESOURCES:

References

Images

Previous articles about the OpenMP API

- OpenMP API Introduction → OpenMP API, Abstraction Benefits, Fork-Join Model, General Directive Format, Compilation

- Parallel Regions in OpenMP → Parallel construct, Thread management, Basic Clauses, Example programs

- Parallel For Loops in OpenMP → Parallel For Construct, Iteration Scheduling, Additional Clauses, Example programs

- Parallel Sections in OpenMP → Parallel Sections Construct, Serial Section, Example Program

- Atomic Operations and Critical Sections → Process Synchronization Theory, Critical Section Construct, Atomic Operations, Example Program

- Tasks in OpenMP → Task Construct, Synchronization, Loops and Groups, Example Programs

- Locks and Barriers in OpenMP → Lock and Barrier Synchronization, Flush for Memory Consistency

- SIMD Instructions in OpenMP → SIMD, for loops with SIMD, Example Program

Final words | Next up

And this is actually it for today's post!

There's a 99% probability that the next article about Parallel Programming APIs will be about Nvidia's CUDA API, but because of the whole drama surrounding Nvidia at the moment I might also get into OpenCL (which is basically the same, but works on both Nvidia and AMD Graphics Cards)...

See ya!

Keep on drifting!